Artificial Intelligence

Category: Amazon SageMaker Studio

Implement user-level access control for multi-tenant ML platforms on Amazon SageMaker AI

In this post, we discuss permission management strategies, focusing on attribute-based access control (ABAC) patterns that enable granular user access control while minimizing the proliferation of AWS Identity and Access Management (IAM) roles. We also share proven best practices that help organizations maintain security and compliance without sacrificing operational efficiency in their ML workflows.

Supercharge your AI workflows by connecting to SageMaker Studio from Visual Studio Code

AI developers and machine learning (ML) engineers can now use the capabilities of Amazon SageMaker Studio directly from their local Visual Studio Code (VS Code). With this capability, you can use your customized local VS Code setup, including AI-assisted development tools, custom extensions, and debugging tools, while accessing compute resources and your data in SageMaker Studio. In this post, we show you how to remotely connect your local VS Code to SageMaker Studio development environments, so that you keep your customized setup while using Amazon SageMaker AI compute resources.

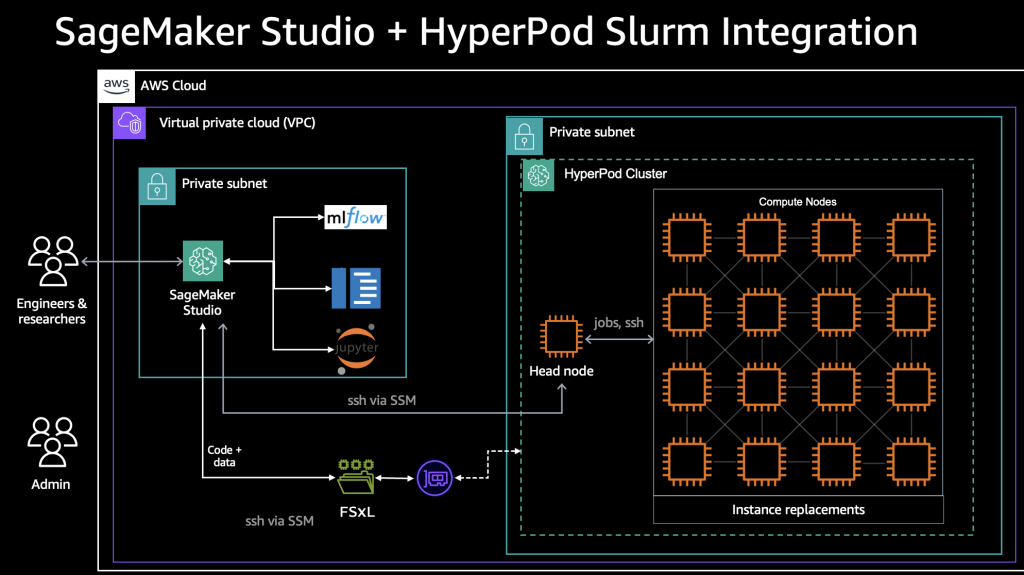

Accelerate foundation model training and inference with Amazon SageMaker HyperPod and Amazon SageMaker Studio

In this post, we discuss how SageMaker HyperPod and SageMaker Studio together can improve and speed up the development experience of data scientists by combining the IDEs and tooling of SageMaker Studio with the scalability and resiliency of SageMaker HyperPod with Amazon EKS. The solution also simplifies setup for the system administrator of the centralized system by using the governance and security capabilities offered by the AWS services.

Deploy Amazon SageMaker Projects with Terraform Cloud

In this post, you define, deploy, and provision a SageMaker Project custom template purely in Terraform. With no dependencies on other IaC tools, you can now enable SageMaker Projects strictly within your Terraform Enterprise infrastructure.

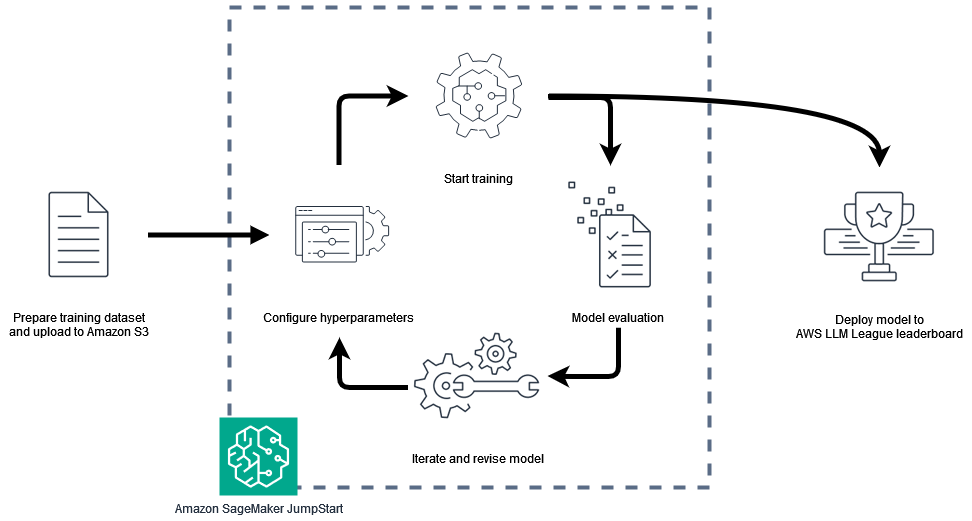

InterVision accelerates AI development using AWS LLM League and Amazon SageMaker AI

This post demonstrates how AWS LLM League’s gamified enablement accelerates partners’ practical AI development capabilities, while showcasing how fine-tuning smaller language models can deliver cost-effective, specialized solutions for specific industry needs.

NeMo Retriever Llama 3.2 text embedding and reranking NVIDIA NIM microservices now available in Amazon SageMaker JumpStart

Today, we are excited to announce that the NeMo Retriever Llama 3.2 Text Embedding and Reranking NVIDIA NIM microservices are available in Amazon SageMaker JumpStart. With this launch, you can now deploy NVIDIA’s optimized reranking and embedding models to build, experiment, and responsibly scale your generative AI ideas on AWS. In this post, we demonstrate how to get started with these models on SageMaker JumpStart.

Time series forecasting with LLM-based foundation models and scalable AIOps on AWS

In this blog post, we guide you through integrating Chronos into Amazon SageMaker Pipelines using a synthetic dataset that simulates a sales forecasting scenario, unlocking accurate and efficient predictions with minimal data.

How Rocket Companies modernized their data science solution on AWS

In this post, we share how we modernized Rocket Companies’ data science solution on AWS to cut delivery time from eight weeks to under one hour, improve operational stability and support by reducing incident tickets by over 99% in 18 months, power 10 million automated data science and AI decisions made daily, and provide a seamless data science development experience.

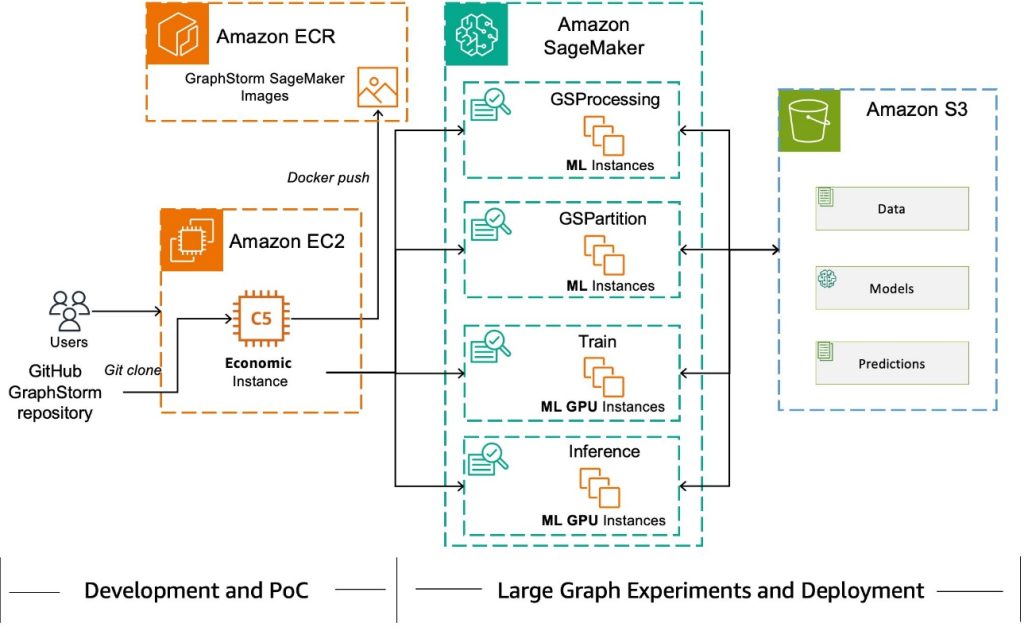

Faster distributed graph neural network training with GraphStorm v0.4

GraphStorm is a low-code enterprise graph machine learning (ML) framework that provides ML practitioners a simple way of building, training, and deploying graph ML solutions on industry-scale graph data. In this post, we demonstrate how GraphBolt enhances GraphStorm’s performance in distributed settings. We provide a hands-on example of using GraphStorm with GraphBolt on SageMaker for distributed training. Lastly, we share how to use Amazon SageMaker Pipelines with GraphStorm.

Streamline custom environment provisioning for Amazon SageMaker Studio: An automated CI/CD pipeline approach

In this post, we show how to create an automated continuous integration and delivery (CI/CD) pipeline solution to build, scan, and deploy custom Docker images to SageMaker Studio domains. You can use this solution to promote consistency of the analytical environments for data science teams across your enterprise.