Artificial Intelligence

Category: Amazon SageMaker

Evaluating generative AI models with Amazon Nova LLM-as-a-Judge on Amazon SageMaker AI

Evaluating the performance of large language models (LLMs) goes beyond statistical metrics like perplexity or bilingual evaluation understudy (BLEU) scores. For most real-world generative AI scenarios, it’s crucial to understand whether a model is producing better outputs than a baseline or an earlier iteration. This is especially important for applications such as summarization, content generation, […]

Building enterprise-scale RAG applications with Amazon S3 Vectors and DeepSeek R1 on Amazon SageMaker AI

Organizations are adopting large language models (LLMs), such as DeepSeek R1, to transform business processes, enhance customer experiences, and drive innovation at unprecedented speed. However, standalone LLMs have key limitations such as hallucinations, outdated knowledge, and no access to proprietary data. Retrieval Augmented Generation (RAG) addresses these gaps by combining semantic search with generative AI, […]

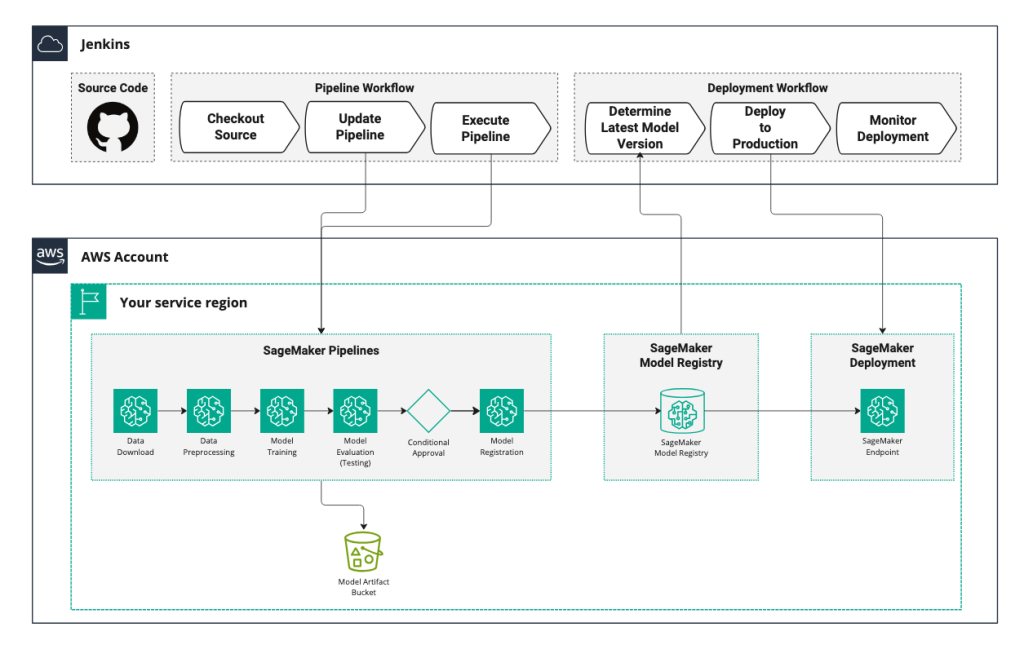

How Rapid7 automates vulnerability risk scores with ML pipelines using Amazon SageMaker AI

In this post, we share how Rapid7 implemented end-to-end automation for the training, validation, and deployment of ML models that predict CVSS vectors. With this automation, Rapid7 customers have the information they need to accurately understand their risk and prioritize remediation measures.

Build secure RAG applications with AWS serverless data lakes

In this post, we explore how to build a secure RAG application using a serverless data lake architecture, an important data strategy to support generative AI development. We use Amazon Web Services (AWS) services including Amazon S3, Amazon DynamoDB, AWS Lambda, and Amazon Bedrock Knowledge Bases to create a comprehensive solution that supports unstructured data assets and can be extended to structured data. The post covers how to implement fine-grained access controls for your enterprise data and design metadata-driven retrieval systems that respect security boundaries. These approaches will help you maximize the value of your organization’s data while maintaining robust security and compliance.

Advanced fine-tuning methods on Amazon SageMaker AI

When fine-tuning ML models on AWS, you can choose the right tool for your specific needs. AWS provides a comprehensive suite of tools for data scientists, ML engineers, and business users to achieve their ML goals. AWS has built solutions to support various levels of ML sophistication, from simple SageMaker training jobs for foundation model (FM) fine-tuning to the power of SageMaker HyperPod for cutting-edge research. We invite you to explore these options, starting with what suits your current needs, and evolve your approach as those needs change.

Streamline machine learning workflows with SkyPilot on Amazon SageMaker HyperPod

This post is co-written with Zhanghao Wu, co-creator of SkyPilot. The rapid advancement of generative AI and foundation models (FMs) has significantly increased computational resource requirements for machine learning (ML) workloads. Modern ML pipelines require efficient systems for distributing workloads across accelerated compute resources while keeping developer productivity high. Organizations need infrastructure solutions […]

Implement user-level access control for multi-tenant ML platforms on Amazon SageMaker AI

In this post, we discuss permission management strategies, focusing on attribute-based access control (ABAC) patterns that enable granular user access control while minimizing the proliferation of AWS Identity and Access Management (IAM) roles. We also share proven best practices that help organizations maintain security and compliance without sacrificing operational efficiency in their ML workflows.

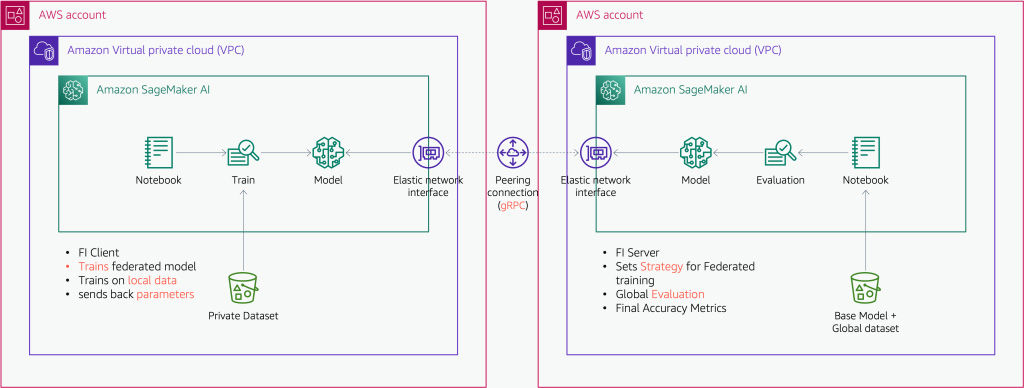

Fraud detection empowered by federated learning with the Flower framework on Amazon SageMaker AI

In this post, we explore how SageMaker and federated learning help financial institutions build scalable, privacy-first fraud detection systems.

New capabilities in Amazon SageMaker AI continue to transform how organizations develop AI models

In this post, we share some of the new innovations in SageMaker AI that can accelerate how you build and train AI models. These innovations include new observability capabilities in SageMaker HyperPod, the ability to deploy JumpStart models on HyperPod, remote connections to SageMaker AI from local development environments, and fully managed MLflow 3.0.

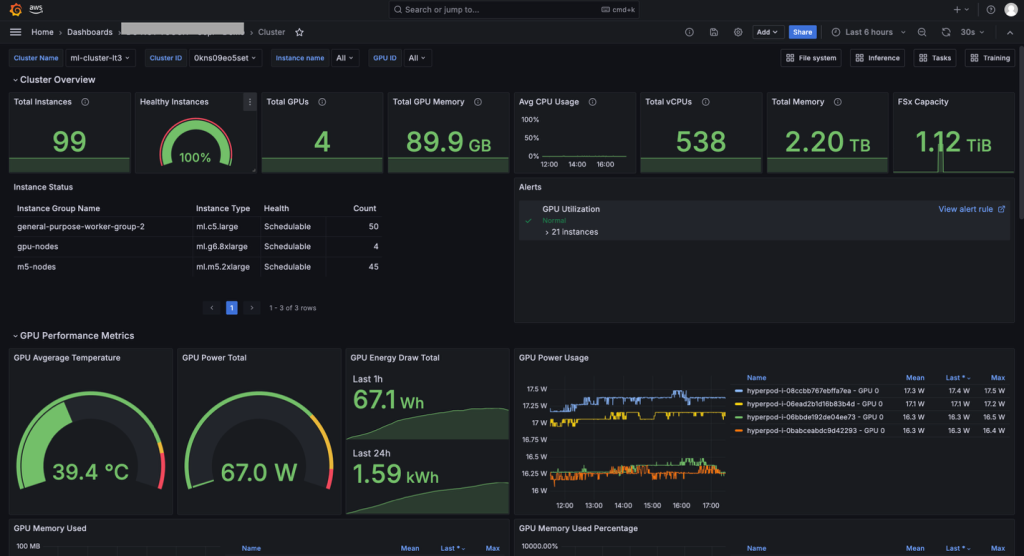

Accelerate foundation model development with one-click observability in Amazon SageMaker HyperPod

With a one-click installation of the Amazon Elastic Kubernetes Service (Amazon EKS) add-on for SageMaker HyperPod observability, you can consolidate health and performance data from NVIDIA DCGM, instance-level Kubernetes node exporters, Elastic Fabric Adapter (EFA), integrated file systems, Kubernetes APIs, Kueue, and SageMaker HyperPod task operators. In this post, we walk you through installing and using the unified dashboards of the out-of-the-box observability feature in SageMaker HyperPod. We cover the one-click installation from the Amazon SageMaker AI console, navigating the dashboard and the metrics it consolidates, and advanced topics such as setting up custom alerts.